GPT-3

I’ve been following OpenAI since 2015, when they announced their mission “to ensure that artificial general intelligence benefits all of humanity.” Their public projects, like OpenAI Gym, blew me away, and I was excited for what was to come.

Five years later, this “GPT-3” thing started going around. What is it, why does it exist, and how does it work?

In particular, I’ve been blown away by examples from Sharif. You briefly describe the web app you want in natural language, and with some “magic,” Debuild generates working code in seconds. I thought to myself: “I’ve done something vaguely similar with Figma, let me see what I can do.”

What

GPT-3 is the underlying machine learning model that the OpenAI API uses. OpenAI’s API takes any text prompt and attempts to continue it by matching the pattern you gave it. It’s “text-in, text-out,” meaning that you provide the API with raw text, and it gives you text back. The API is simply an interface that lets any app or website send the model some input; the model processes that input and generates an output.
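Here is a minimal TypeScript sketch of the two halves of a “text-in, text-out” call. The first half wraps a prompt in an HTTP request. The second reads the generated text back out of the response. It assumes the beta-era completions endpoint for the `davinci` engine, with `prompt`, `max_tokens`, `temperature`, and `stop` request fields and a `choices[0].text` response field. OpenAI has since changed endpoint paths and model names, so treat the URL as an assumption and check the current API reference. The helper names `buildCompletionRequest` and `readCompletionText` are hypothetical.

```typescript
// Plain-object request description, so the sketch compiles without DOM typings.
interface CompletionRequest {
  url: string;
  init: {
    method: "POST";
    headers: Record<string, string>;
    body: string;
  };
}

// Assumption: beta-era endpoint for the "davinci" engine; newer API versions
// use different paths and a `model` field, so verify against current docs.
const COMPLETIONS_URL = "https://api.openai.com/v1/engines/davinci/completions";

// Text in: wrap a raw prompt in a completions request.
function buildCompletionRequest(
  prompt: string,
  apiKey: string,
  maxTokens: number = 64,
  temperature: number = 0.7,
  stop?: string
): CompletionRequest {
  const payload: Record<string, unknown> = {
    prompt: prompt,
    max_tokens: maxTokens,
    temperature: temperature,
  };
  if (stop !== undefined) {
    payload.stop = stop; // e.g. "\n" to end the completion at the first newline
  }
  return {
    url: COMPLETIONS_URL,
    init: {
      method: "POST",
      headers: {
        "Content-Type": "application/json",
        Authorization: "Bearer " + apiKey,
      },
      body: JSON.stringify(payload),
    },
  };
}

// Text out: read the generated text from the parsed JSON response body.
function readCompletionText(response: unknown): string {
  if (typeof response !== "object" || response === null) {
    throw new Error("Response is not an object");
  }
  const choices = (response as { choices?: Array<{ text?: unknown }> }).choices;
  const text = choices && choices.length > 0 ? choices[0].text : undefined;
  if (typeof text !== "string") {
    throw new Error("Response has no choices[0].text");
  }
  return text;
}
```

A caller would pass the result to `fetch(req.url, req.init)` and hand the parsed JSON body to `readCompletionText`. Because the request builder contains no network code, it is easy to test on its own.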

Why

In OpenAI’s own words: “OpenAI’s mission is to ensure that artificial general intelligence (AGI)—by which we mean highly autonomous systems that outperform humans at most economically valuable work—benefits all of humanity. We will attempt to directly build safe and beneficial AGI, but will also consider our mission fulfilled if our work aids others to achieve this outcome.”

By putting the OpenAI API and GPT-3 out in the wild during the API’s beta, OpenAI gets real-world usage to learn from and make its models more sophisticated. The rest of us get to experiment and tinker with new ideas, use-cases, and applications that might never have been thought possible otherwise. I certainly don’t have any ML/AI background, but this puts incredibly powerful models at your fingertips through an extremely flexible, easy-to-use API.

I’ve always shied away from machine learning platforms like TensorFlow because they seemed awfully complex, requiring a lot of training and implementation work just to get up and running. OpenAI, by contrast, is remarkably accessible: there’s a Playground to mess around in and a simple API to build against. That accessibility is only part of why I think there’s a key breakthrough here.

How

GPT-3 was trained on an enormous amount of text, which is what lets it do what it does today: Q&A, chat, summarizing text, and much more. Here’s an example I made in the Playground, a place to interact directly with the API:

Me (providing an example): A small rectangle has a width of 50, a medium rectangle has a width of 250, a large rectangle has a width of 500.

What's the width of the medium rectangle? 250

———

AI (completing its own response):

What's the width of the large rectangle? 500

What's the width of the small rectangle? 50

It picked up the pattern immediately after I gave it the context and one question with its correct answer. It then completed the prompt on its own, asking for the widths of the remaining rectangles and supplying the correct answers.

It’s a bit of a black box, but you’d be surprised how quickly, after only a few examples, it picks up on nuanced patterns and goes off to generate its own output.
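What happened in the Playground is few-shot prompting. The prompt contains some context, one solved example, and an open question, and the model continues the text. Below is a minimal sketch of assembling that kind of prompt. It assumes the blank-line-separated “question answer” layout shown above. `buildFewShotPrompt` and `QAExample` are hypothetical names.

```typescript
// One solved example: a question and the answer we want the model to imitate.
interface QAExample {
  question: string;
  answer: string;
}

// Few-shot prompt: context, solved examples, then one unanswered question.
// The model continues the text, so its first tokens should be the answer.
function buildFewShotPrompt(
  context: string,
  examples: QAExample[],
  nextQuestion: string
): string {
  const solved = examples.map((ex) => ex.question + " " + ex.answer);
  return [context].concat(solved, [nextQuestion]).join("\n\n");
}

// The rectangle example from the Playground session.
const rectanglePrompt = buildFewShotPrompt(
  "A small rectangle has a width of 50, a medium rectangle has a width of 250, a large rectangle has a width of 500.",
  [{ question: "What's the width of the medium rectangle?", answer: "250" }],
  "What's the width of the large rectangle?"
);
console.log(rectanglePrompt);
```

Because the prompt ends on an unanswered question, the model's natural continuation is the answer. Without a stop sequence in the request (for instance `"\n"`), it may keep going and write its own follow-up questions, much like it did above.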

Figma

I’ve experimented with a lot of Figma plugins.

I love how Figma made building plugins as easy as building a website; that’s what lets me experiment quickly and put things together in no time. As I’ve built up concepts and tried new things, I often find myself going back to plugin code I wrote earlier and expanding on it.

This is where “Assistant” comes in.

“Assistant”

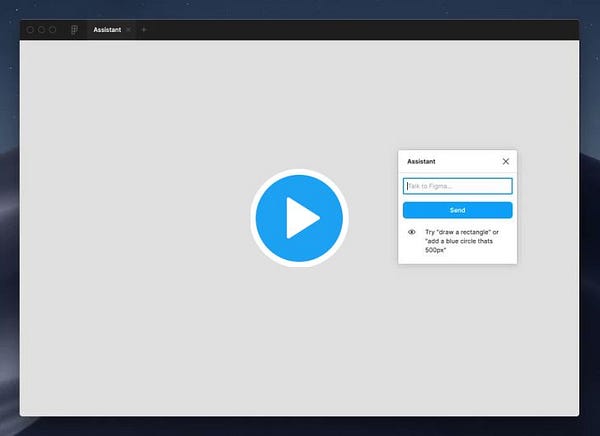

Before I show you how I made “Designer,” I need to show you “Assistant.”

I’ve got a history with Natural Language Processing (NLP). I became fascinated with it back when “invisible apps” (bots you could message via text) were a thing, and I built lots of random bots during that time.

Each of these used Wit, an API that converts natural language into structured output so software can understand what someone is trying to say. I was fascinated by this, and it enabled me to build lots of cool experiences. I wanted to push it further, and what better place to try than inside a design tool? A pointer device and a keyboard are critical for precision and speed, but what if you could just tell Figma to “draw a red rectangle” and it did it?

I originally made Assistant for Sketch in 2018, and I open-sourced the plugin code for it. You could tell it a number of things, like "Draw a red rectangle that is 200px x 300px," "Add a blue circle that's 500px," or "Insert a heart icon." The concept was pretty simple: I trained Wit on the set of things you can tell it, so it could understand shapes, colors, dimensions, positioning, etc. Once the plugin has the raw text input, it pings an API I wrote, which uses Wit under the covers to determine what you’re saying and returns a structured JSON response.

"draw a red circle 500px"

{"message":null,"metadata":{"draw":true,"shape":"circle","eval":null,"svg":null,"dimensions":{"width":500,"height":500},"coordinates":{"x":0,"y":0},"color":[255,0,0]}}

The response is then interpreted by the plugin code to understand what to draw, where to draw it, what color to make it, etc.
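Wit did the real work of turning that sentence into fields. To illustrate the output side only, here is a tiny rule-based stand-in. It uses plain regular expressions, not Wit, and is far less forgiving about phrasing. It produces a subset of the same `metadata` fields for commands like the ones above. The shape list, the color table, and the `parseCommand` name are all hypothetical.

```typescript
// Subset of the fields in Assistant's response metadata.
interface DrawIntent {
  draw: boolean;
  shape: string | null;
  dimensions: { width: number; height: number } | null;
  coordinates: { x: number; y: number };
  color: [number, number, number] | null;
}

// Hypothetical vocabularies; Wit learned these from training examples instead.
const SHAPES = ["rectangle", "square", "circle", "ellipse"];
const COLORS: Record<string, [number, number, number]> = {
  red: [255, 0, 0],
  green: [0, 128, 0],
  blue: [0, 0, 255],
  black: [0, 0, 0],
  white: [255, 255, 255],
};

// Own-property check, so words like "constructor" don't match the prototype.
function hasKey(obj: object, key: string): boolean {
  return Object.prototype.hasOwnProperty.call(obj, key);
}

// Regex stand-in for the Wit-backed API: raw text -> structured intent.
function parseCommand(text: string): DrawIntent {
  const lower = text.toLowerCase();
  const words: string[] = lower.match(/[a-z]+/g) || [];
  const shape = SHAPES.filter((s) => words.indexOf(s) !== -1)[0] || null;
  const colorWord = words.filter((w) => hasKey(COLORS, w))[0];
  // "200px x 300px" -> 200 x 300; a single "500px" -> 500 x 500.
  const sizeMatches: string[] = lower.match(/\d+\s*px/g) || [];
  const sizes = sizeMatches.map((m) => parseInt(m, 10));
  let dimensions: DrawIntent["dimensions"] = null;
  if (sizes.length >= 2) {
    dimensions = { width: sizes[0], height: sizes[1] };
  } else if (sizes.length === 1) {
    dimensions = { width: sizes[0], height: sizes[0] };
  }
  return {
    draw: /\b(draw|add|insert)\b/.test(lower),
    shape: shape,
    dimensions: dimensions,
    coordinates: { x: 0, y: 0 },
    color: colorWord ? COLORS[colorWord] : null,
  };
}
```

A trained service like Wit earns its keep on what a regex misses: synonyms, typos, and phrasing nobody anticipated.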

The high-level order of operations is: raw text → API to understand intent using Wit → return a structured JSON response → Figma plugin code.
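The last step, where plugin code interprets the response, could look something like the sketch below. It converts the example response above into a plain description of the node to create. It also scales the 0 to 255 color channels into the 0 to 1 range that Figma's `RGB` type uses. The `DrawSpec` type, the shape-to-node mapping, and the 100×100 default size are assumptions for illustration. The Figma calls appear only in a comment because they need the plugin runtime.

```typescript
// Shape of the JSON the Assistant API returns (only the fields used here).
interface AssistantResponse {
  message: string | null;
  metadata: {
    draw: boolean;
    shape: string | null;
    dimensions: { width: number; height: number } | null;
    coordinates: { x: number; y: number } | null;
    color: number[] | null;
  };
}

// Plain description of the node to create. Figma's RGB channels are 0 to 1.
interface DrawSpec {
  node: "ELLIPSE" | "RECTANGLE";
  x: number;
  y: number;
  width: number;
  height: number;
  fill: { r: number; g: number; b: number };
}

// Hypothetical fallbacks for when the text didn't mention a size or color.
const DEFAULT_SIZE = { width: 100, height: 100 };
const DEFAULT_COLOR = [0, 0, 0];

function toDrawSpec(res: AssistantResponse): DrawSpec | null {
  const m = res.metadata;
  if (!m.draw || m.shape === null) return null;
  let node: DrawSpec["node"];
  if (m.shape === "circle" || m.shape === "ellipse") node = "ELLIPSE";
  else if (m.shape === "rectangle" || m.shape === "square") node = "RECTANGLE";
  else return null; // icons, SVGs, etc. would take other code paths
  const size = m.dimensions || DEFAULT_SIZE;
  const pos = m.coordinates || { x: 0, y: 0 };
  const rgb = m.color || DEFAULT_COLOR;
  return {
    node: node,
    x: pos.x,
    y: pos.y,
    width: size.width,
    height: size.height,
    fill: { r: rgb[0] / 255, g: rgb[1] / 255, b: rgb[2] / 255 },
  };
}

// Inside the plugin, a spec would then drive Figma's plugin API, roughly:
//   const shape = spec.node === "ELLIPSE" ? figma.createEllipse() : figma.createRectangle();
//   shape.resize(spec.width, spec.height);
//   shape.x = spec.x;
//   shape.y = spec.y;
//   shape.fills = [{ type: "SOLID", color: spec.fill }];
```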

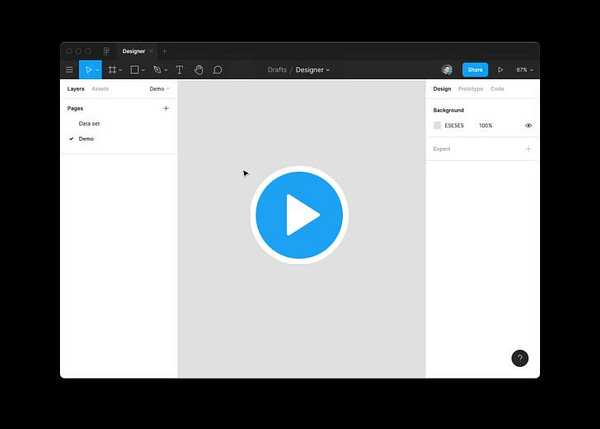

“Designer”

When I saw all of the GPT-3 concepts going around, I just had to get my hands on it. I didn’t know what I was gonna work on at first, but then it occurred to me: “I’ve done something vaguely similar with Figma, let me see what I can do.”

GPT-3 could take the Assistant concept to the next level. Whereas Assistant handles a single command at a time, GPT-3 could handle a description with many components at once. And after seeing just a few examples, it seemed able to generate its own random examples while sticking to the expected output format.

I trained GPT-3 in the OpenAI API Playground on a few examples, each pairing a raw text description of an app and its components with an example JSON dictionary mapping of it. Beneath the dashed line (after my examples) is what GPT-3 came up with on its own:

an app that has a navigation bar with a camera icon, "Photos" title, and a message icon. a feed of photos with each photo having a user icon, a photo, a heart icon, and a chat bubble icon

{
  "navigation": [
    {"icon": "camera"},
    {"title": "Photos"},
    {"icon": "message"}
  ],
  "photos": [
    {"icon": "user"},
    {"frame": "photo"},
    {"icon": "heart"},
    {"icon": "chat bubble"}
  ]
}

Whoa. I was seriously impressed. After only two examples, GPT-3 was generating its own description of an app, along with what it thought the JSON dictionary output should be.

The high-level order of operations is: raw text → OpenAI API + GPT-3 → return a structured JSON response (representation of the Figma canvas) → Figma plugin code.
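The middle step has one practical wrinkle. As the Playground example shows, GPT-3 doesn't necessarily stop after one JSON object; it may carry on and invent the next app description. Here is a minimal sketch of pulling just the first complete object out of a completion. It counts braces while skipping any braces inside JSON strings. `extractFirstJsonObject` is a hypothetical helper.

```typescript
// Return the first complete top-level {...} object in a completion.
// Braces inside JSON strings are ignored; anything after the object
// (for example, a new app description the model invented) is dropped.
function extractFirstJsonObject(completion: string): unknown {
  const start = completion.indexOf("{");
  if (start === -1) {
    throw new Error("No JSON object found in completion");
  }
  let depth = 0;
  let inString = false;
  let escaped = false;
  for (let i = start; i < completion.length; i++) {
    const ch = completion.charAt(i);
    if (inString) {
      // Inside a string: only track escapes and the closing quote.
      if (escaped) {
        escaped = false;
      } else if (ch === "\\") {
        escaped = true;
      } else if (ch === '"') {
        inString = false;
      }
      continue;
    }
    if (ch === '"') {
      inString = true;
    } else if (ch === "{") {
      depth++;
    } else if (ch === "}") {
      depth--;
      if (depth === 0) {
        // Throws a SyntaxError if the model produced malformed JSON.
        return JSON.parse(completion.slice(start, i + 1));
      }
    }
  }
  throw new Error("Completion ended before the JSON object was closed");
}
```

A stop sequence in the request is another way to cut the completion off. Scanning for the matching closing brace avoids having to choose the right stop sequence. If the model emits malformed JSON, `JSON.parse` throws, and the plugin can treat that as a failed generation.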

The Figma plugin code then takes things a step further: it interprets the JSON response to lay out a device frame. Order matters here, and there is little room for error. In the photo app demo you saw, the plugin makes a few assumptions.
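Here is a sketch of what "order matters" means in practice: the JSON dictionary becomes positioned elements for a device frame. The assumptions are illustrative, not necessarily the ones the plugin makes:

- a 375pt-wide frame
- a 44pt navigation bar with evenly spaced items
- every other section stacked vertically
- fixed row heights per element kind

`layoutScreen` and the metrics are hypothetical.

```typescript
// One element is a single-key object such as {"icon": "camera"}.
type LayoutElement = Record<string, string>;
type LayoutSpec = Record<string, LayoutElement[]>;

interface Placed {
  section: string;
  kind: string;
  value: string;
  x: number;
  y: number;
  height: number;
}

// Hypothetical metrics for a 375pt-wide phone frame.
const FRAME_WIDTH = 375;
const NAV_HEIGHT = 44;
const ROW_HEIGHTS: Record<string, number> = { icon: 24, title: 24, frame: 375 };

function layoutScreen(spec: LayoutSpec): Placed[] {
  const placed: Placed[] = [];
  let y = 0;
  // For non-numeric keys like these, Object.keys follows the order from
  // JSON.parse, so sections are laid out top to bottom as the model wrote them.
  for (const section of Object.keys(spec)) {
    const elements = spec[section].filter((el) => Object.keys(el).length > 0);
    if (section === "navigation") {
      // Assumption: navigation is a single bar with evenly spaced items.
      const slot = FRAME_WIDTH / Math.max(elements.length, 1);
      elements.forEach((el, i) => {
        const kind = Object.keys(el)[0];
        placed.push({ section, kind, value: el[kind], x: Math.round(i * slot), y, height: NAV_HEIGHT });
      });
      y += NAV_HEIGHT;
    } else {
      // Assumption: every other section stacks its elements vertically.
      for (const el of elements) {
        const kind = Object.keys(el)[0];
        const height = ROW_HEIGHTS[kind] || 24;
        placed.push({ section, kind, value: el[kind], x: 0, y, height });
        y += height;
      }
    }
  }
  return placed;
}
```

Sections and elements are placed strictly in the order they appear. Swapping two keys in GPT-3's output therefore moves things on the canvas, which is why there is so little room for error.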

The key breakthrough

The key breakthrough is GPT-3’s ability to generate the expected JSON output from a raw text input after I trained it on just a few examples. As the Playground example above shows, it even starts generating its very own app descriptions and structured output. Whoa. This type of understanding is what allowed me to take Assistant to the next level. That said, the plugin code still needs a lot of work to generalize the canvas output and make fewer assumptions.
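Making fewer assumptions starts with checking the model's output before drawing anything. Here is a minimal sketch of a validator for the format used above. It assumes sections map to arrays of single-key objects whose keys are `icon`, `title`, or `frame`. The allowed kinds and the `validateLayout` name are hypothetical.

```typescript
// Element kinds the plugin knows how to draw (hypothetical list).
const KNOWN_KINDS = ["icon", "title", "frame"];

// Returns a list of problems; an empty list means the layout can be drawn.
function validateLayout(data: unknown): string[] {
  if (typeof data !== "object" || data === null || Array.isArray(data)) {
    return ["top level: expected an object of sections"];
  }
  const problems: string[] = [];
  const sections = data as Record<string, unknown>;
  for (const name of Object.keys(sections)) {
    const elements = sections[name];
    if (!Array.isArray(elements)) {
      problems.push(name + ": expected an array");
      continue;
    }
    elements.forEach((el: unknown, i: number) => {
      const where = name + "[" + i + "]";
      if (typeof el !== "object" || el === null || Array.isArray(el)) {
        problems.push(where + ": expected an object");
        return;
      }
      const keys = Object.keys(el);
      if (keys.length !== 1) {
        problems.push(where + ": expected exactly one key");
        return;
      }
      const kind = keys[0];
      const value = (el as Record<string, unknown>)[kind];
      if (KNOWN_KINDS.indexOf(kind) === -1) {
        problems.push(where + ': unknown kind "' + kind + '"');
      } else if (typeof value !== "string") {
        problems.push(where + ": value must be a string");
      }
    });
  }
  return problems;
}
```

An empty result means the layout matches the expected shape. Otherwise, the plugin could skip the bad elements or request another completion.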

Other details: when the plugin comes across a {"frame": "photo"} object, it fetches a sample image from the Unsplash API. Likewise, for a named icon, it pulls the SVG code from the Feather icon library.
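The lookup step can be sketched as a function that turns each element into an asset request. Feather icons use kebab-case names such as `message-circle`, so free-form words from GPT-3 need mapping. The alias table below is a hypothetical example. The image search is left abstract rather than guessing at a specific Unsplash endpoint. In the `feather-icons` npm package, `feather.icons[name].toSvg()` returns SVG markup, which a Figma plugin could then pass to `figma.createNodeFromSvg`.

```typescript
// What the plugin needs to fetch or create for one element.
type AssetRequest =
  | { type: "image"; query: string } // e.g. searched on Unsplash
  | { type: "icon"; name: string } // a Feather icon name
  | { type: "text"; text: string };

// Hypothetical aliases from words GPT-3 uses to Feather icon names.
const ICON_ALIASES: Record<string, string> = {
  "chat bubble": "message-circle",
  message: "message-square",
};

function toFeatherName(word: string): string {
  const key = word.trim().toLowerCase();
  if (Object.prototype.hasOwnProperty.call(ICON_ALIASES, key)) {
    return ICON_ALIASES[key];
  }
  // Fallback: Feather names are kebab-case, e.g. "arrow-left".
  return key.replace(/\s+/g, "-");
}

function resolveAsset(el: Record<string, string>): AssetRequest | null {
  if (typeof el.frame === "string") return { type: "image", query: el.frame };
  if (typeof el.icon === "string") return { type: "icon", name: toFeatherName(el.icon) };
  if (typeof el.title === "string") return { type: "text", text: el.title };
  return null;
}

// In the plugin, an icon request might then become, roughly:
//   const svg = feather.icons[req.name].toSvg();
//   const node = figma.createNodeFromSvg(svg);
```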

Overall, what you’re seeing is GPT-3 doing an incredible job of pattern matching: mapping raw text input to raw text output. In this case, the input is a description of an app layout, and the output is structured JSON that maps to the description.

Putting all of the pieces together like this is what makes the OpenAI API + GPT-3 so powerful. This example happens to combine GPT-3 with Figma, and there are many more examples yet to be conceived. You can only imagine what’s next.

What does this mean?

I made this example not only to expand on the natural language example I showed in the past with Assistant, but also to show what’s possible with an exciting new technology: the OpenAI API and GPT-3. It highlights the importance of structured data and of understanding what the user means. Not every example is gonna work, and there’s a lot of work to be done before it truly blows your mind. It’s not gonna replace you as a designer. Yet.

I’ll continue to explore the possibilities and come up with new ideas. You know I love to build my ideas.

